Artificial intelligence predicts direct-acting antivirals failure among hepatitis C virus patients: A nationwide hepatitis C virus registry program

Article information

Abstract

Background/Aims

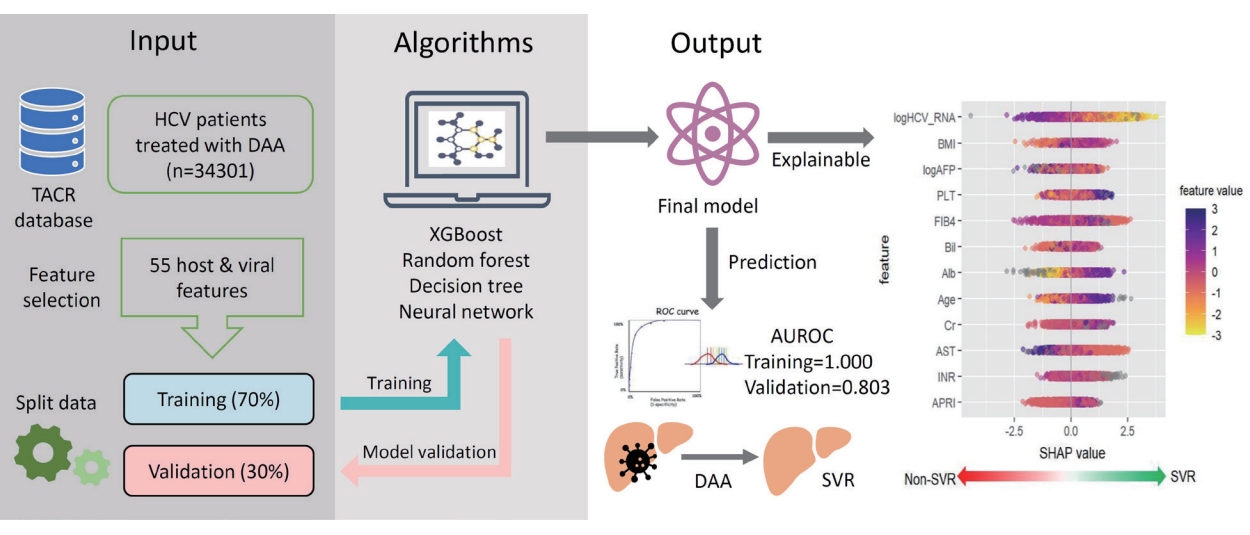

Despite the high efficacy of direct-acting antivirals (DAAs), approximately 1–3% of hepatitis C virus (HCV) patients fail to achieve a sustained virological response. We conducted a nationwide study to investigate risk factors associated with DAA treatment failure. Machine-learning algorithms were applied to identify patients who may fail to respond to DAA therapy.

Methods

We analyzed the Taiwan HCV Registry Program database to explore predictors of DAA failure in HCV patients. Fifty-five host and virological features were assessed using multivariate logistic regression, decision tree, random forest, eXtreme Gradient Boosting (XGBoost), and artificial neural network models. The primary outcome was undetectable HCV RNA 12 weeks after the end of treatment.

Results

The training (n=23,955) and validation (n=10,346) datasets had similar baseline demographics, with an overall DAA failure rate of 1.6% (n=538). Multivariate logistic regression analysis revealed that liver cirrhosis, hepatocellular carcinoma, poor DAA adherence, and higher hemoglobin A1c were significantly associated with virological failure. XGBoost outperformed the other algorithms and logistic regression models, with an area under the receiver operating characteristic curve of 1.000 in the training dataset and 0.803 in the validation dataset. The top five predictors of treatment failure were HCV RNA, body mass index, α-fetoprotein, platelets, and FIB-4 index. The accuracy, sensitivity, specificity, positive predictive value, and negative predictive value of the XGBoost model (cutoff value=0.5) were 99.5%, 69.7%, 99.9%, 97.4%, and 99.5%, respectively, for the entire dataset.

Conclusions

Machine learning algorithms effectively provide risk stratification for DAA failure and additional information on the factors associated with DAA failure.

Graphical Abstract

INTRODUCTION

Direct-acting antivirals (DAAs) have changed the treatment landscape for patients infected with hepatitis C virus (HCV). However, although DAAs achieve SVR rates of up to 97% across all HCV genotypes, approximately 1–3% of HCV patients fail to achieve a sustained virological response (SVR) [1,2]. Factors associated with DAA failure include decompensated liver cirrhosis, resistance-associated substitutions (RASs), hepatocellular carcinoma (HCC), prior treatment failure, and poor drug adherence [3,4]. As comprehensive HCV elimination programs are advocated worldwide, an increasing number of patients with HCV infection are expected to require DAA salvage therapy. Therefore, all risk factors associated with DAA failure must be considered together to reduce the retreatment burden.

Artificial intelligence (AI) has emerged as a powerful tool for disease diagnosis and risk assessment in healthcare. Factors contributing to treatment failure vary among individuals, and such heterogeneous data with complex interactions are difficult to evaluate with regression methods. Moreover, conventional regression models assume a linear relationship between each predictor and the outcome (on the log-odds scale for logistic regression) unless nonlinear terms are specified in advance. In contrast, machine-learning (ML) approaches can process both linear and nonlinear information and recognize hidden relationships between variables and outcomes in big data [5]. ML algorithms can be classified as supervised or unsupervised. Supervised ML is suited to labeled (annotated) data, whereas unsupervised ML can process datasets that lack class labels. Common supervised ML algorithms include decision trees (DT), random forest (RF), eXtreme Gradient Boosting (XGBoost), and artificial neural networks (ANN) [6,7]. AI therefore offers a new approach to understanding diseases by integrating multidimensional data through “automatic learning” [8]. Advances in AI have enabled it to serve as a decision-support tool and to improve diagnostic quality in healthcare.

We conducted a real-world, multicenter study using the Taiwan HCV Registry (TACR) database to explore the risk factors associated with DAA failure and to apply artificial intelligence to rapidly identify HCV patients prone to virological failure.

MATERIALS AND METHODS

Subjects

The TACR Program is a nationwide HCV registry platform that has been implemented by the Taiwan Association for the Study of the Liver since 2020 [9]. The TACR conducted a real-world, multicenter, prospective cohort study of DAA therapy. A total of 34,301 patients with chronic hepatitis C aged ≥18 years who received DAAs and had available SVR12 data were enrolled in this study. Patients who died during treatment or were lost to follow-up within 12 weeks after the completion of therapy were excluded. Baseline demographics and virological characteristics before and after antiviral therapy were recorded in the TACR database. The primary outcome was SVR12, defined as undetectable serum HCV RNA 12 weeks after the end of treatment. The choice of antiviral regimens followed international HCV treatment guidelines [10,11] and the reimbursement criteria of the Taiwan National Health Insurance Administration [2]. This study was approved by the Institutional Review Board of Kaohsiung Medical University Hospital and adhered to the Declaration of Helsinki. Written informed consent was obtained from all participants.

Machine learning models

The subjects were randomly assigned to a training dataset (70%, n=23,955) and a validation dataset (30%, n=10,346). Fifty-five host, virological, and on-treatment features were input into the ML models (Supplementary Table 1). The algorithms included DT, RF, XGBoost, and ANN. ML analysis was performed using the rpart, randomForest, xgboost, and neural network packages of R software (https://www.r-project.org/). Because some algorithms cannot handle missing values, missing data (14.3%) were imputed using the k-nearest neighbor method before generating predictions [12]. The best-performing model was used to stratify patients by their risk of DAA treatment failure. Patients were further divided into subgroups based on deciles of predicted risk probability to allow more granular management of high-risk patients.
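A minimal Python sketch of the two preprocessing steps described above follows: a seeded random 70/30 split and a simple k-nearest-neighbor imputation. It is not the authors' R code (which is provided in the Supplementary Materials). The helper names, the seed, k, and the rule of averaging the k nearest donors by Euclidean distance over jointly observed features are illustrative assumptions. The text says only that assignment was random, so the exact split rule (the reported sizes are close to, but not exactly, 70/30) is not reproduced.

```python
import math
import random


def split_70_30(records, seed=2023):
    """Randomly assign records to a 70% training set and a 30% validation set."""
    rng = random.Random(seed)      # fixed seed so the split is reproducible
    shuffled = list(records)       # copy so the caller's list is not reordered
    rng.shuffle(shuffled)
    cut = round(0.7 * len(shuffled))
    return shuffled[:cut], shuffled[cut:]


def knn_impute(rows, k=5):
    """Replace None entries with the mean of that feature in the k nearest rows.

    Hypothetical helper. Distance is Euclidean over the features observed in
    both rows; only rows that observed the missing feature act as donors.
    """
    imputed = [list(row) for row in rows]
    for i, row in enumerate(rows):
        missing = [j for j, v in enumerate(row) if v is None]
        if not missing:
            continue
        neighbours = []
        for idx, other in enumerate(rows):
            shared = [j for j in range(len(row))
                      if row[j] is not None and other[j] is not None]
            if idx == i or not shared:
                continue
            dist = math.sqrt(sum((row[j] - other[j]) ** 2 for j in shared))
            neighbours.append((dist, idx))
        neighbours.sort()          # nearest rows first
        for j in missing:
            # take the k nearest rows that actually observed feature j
            donors = [rows[idx][j] for _, idx in neighbours
                      if rows[idx][j] is not None][:k]
            if donors:
                imputed[i][j] = sum(donors) / len(donors)
    return imputed


train, valid = split_70_30(list(range(10)))
print(len(train), len(valid))
rows = [[1.0, 2.0], [1.1, None], [5.0, 9.0], [0.9, 2.2]]
print(knn_impute(rows, k=2)[1])   # second row's gap filled from its 2 nearest rows
```

In a real pipeline the features would be standardized before distances are computed, so that no single unit (for example, HCV RNA in IU/mL) dominates the neighbor search.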

The performance of the ML models was assessed using the area under the receiver operating characteristic curve (AUROC), accuracy derived from the confusion matrix, the precision-recall curve, and the F1-score [13]. A precision-recall curve closer to the upper-right corner indicates better performance. The F1-score is the harmonic mean of precision and recall and is informative when the classes in the dataset are imbalanced. The F1-score ranges from 0 to 1; a predictive model with an F1-score closer to 1 is considered better. The DeLong test was used to compare the AUROCs of the models [14]. The code for the ML models is presented in the Supplementary Materials.
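To make these metric definitions concrete, the sketch below computes them from a 2×2 confusion matrix, with F1 as the harmonic mean of precision and recall and DAA failure (non-SVR) treated as the positive class. The counts are back-calculated from the whole-cohort results reported later (538 failures, 375 detected at the 0.5 cutoff, PPV 97.4%); the false-positive count of 10 is inferred from the reported PPV and is therefore an assumption, not a figure given in the text.

```python
def classification_metrics(tp, fp, fn, tn):
    """Standard confusion-matrix metrics (non-SVR = positive class)."""
    precision = tp / (tp + fp)            # positive predictive value
    recall = tp / (tp + fn)               # sensitivity
    specificity = tn / (tn + fp)
    npv = tn / (tn + fn)                  # negative predictive value
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    # F1 is the harmonic (not arithmetic) mean of precision and recall
    f1 = 2 * precision * recall / (precision + recall)
    return {"accuracy": accuracy, "precision": precision, "recall": recall,
            "specificity": specificity, "npv": npv, "f1": f1}


# Back-calculated whole-cohort counts (n = 34,301); fp = 10 is inferred from PPV.
tp, fn = 375, 538 - 375
fp = 10
tn = 34301 - tp - fn - fp
for name, value in classification_metrics(tp, fp, fn, tn).items():
    print(f"{name}: {value:.4f}")
```

With these counts the function reproduces the reported accuracy, sensitivity, PPV, and NPV to within rounding. It also shows why F1 is preferred to accuracy under class imbalance: accuracy stays near 99.5% even though almost a third of the failures are missed, whereas F1 is pulled down by the low recall.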

Statistical analyses

Student’s t-test was used to compare continuous variables. Categorical variables were evaluated using the chi-square (χ²) test or Fisher’s exact test. Multivariate logistic regression analysis was performed to determine independent risk factors associated with treatment failure. Data were analyzed using the Statistical Package for the Social Sciences software (SPSS, version 26; IBM Corp., Armonk, NY, USA). Statistical significance was defined as a two-tailed P-value <0.05.

RESULTS

Baseline demographics

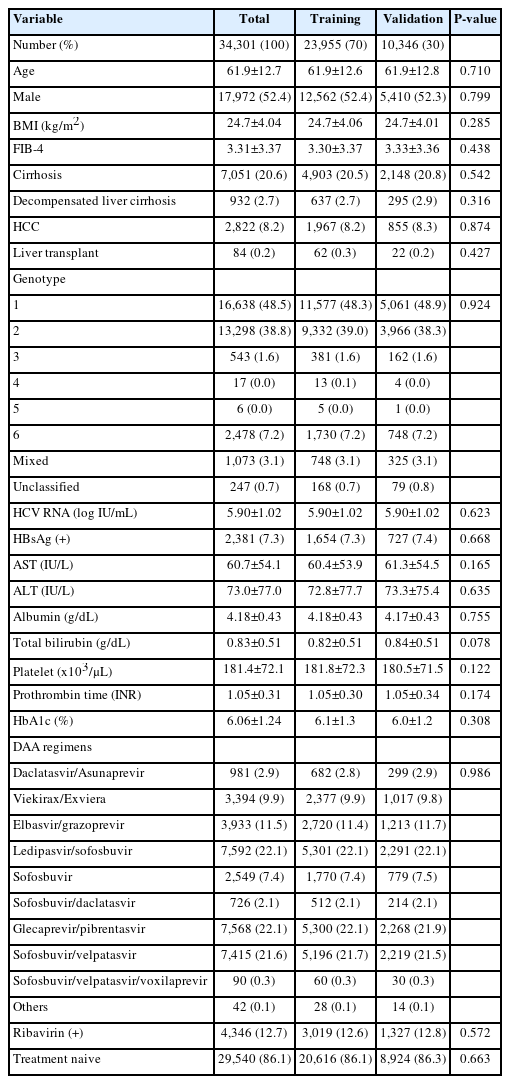

The baseline demographics of the study participants are presented in Table 1, with no significant differences in age, sex, body mass index (BMI), biochemical data, cirrhosis, HCV genotypes, viral load, DAA regimens, HBV coinfection, or presence of HCC between the training and validation datasets.

Logistic regression analysis of the factors associated with DAA treatment failure

The overall DAA failure rate was 1.6%. In the univariate analysis, female sex, fibrosis-4 index (FIB-4), cirrhosis, decompensation, presence of HCC, HCV genotype, higher HCV viral load, protease inhibitor-based DAA regimens, treatment experience, lower DAA/ribavirin adherence, and severe adverse effects significantly increased the risk of DAA treatment failure. Among biochemical parameters, lower albumin, platelet, and creatinine levels significantly increased the probability of non-SVR, and elevated aspartate aminotransferase (AST), bilirubin, prothrombin time, and hemoglobin A1c (HbA1c) levels significantly increased the likelihood of virological failure. In the multivariate analysis, cirrhosis, HCC, higher HbA1c, and lower DAA adherence were independent risk factors for DAA treatment failure after adjustment for variables with P<0.05 in the univariate analysis (Table 2).

We developed a conventional prediction model using logistic regression as follows:

Logistic regression (LR) model=3×Liver cirrhosis (yes=1, no=0)+4×HCC (yes=1, no=0)+1×HbA1c+33×DAA adherence (<20%=5, 20–40%=4, 40–60%=3, 60–80%=2, >80%=1)

The components of the LR model were the four independent risk factors identified in the multivariate logistic regression analysis. The coefficient for each variable was derived from its odds ratio in the multivariate logistic regression analysis. The cutoff value for discriminating DAA failure was set at 40 using Youden’s index in the ROC curve analysis.
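The score and its cutoff can be expressed directly in code. The sketch below implements the formula above with the stated adherence coding and the cutoff of 40, and adds a generic Youden's-index search (J = sensitivity + specificity − 1) of the kind used to choose such a cutoff. The function names and example patients are hypothetical; how the exact boundary values 20, 40, 60, and 80% are coded, and treating a score ≥40 as "predicted failure", are assumptions about details the text leaves open.

```python
def adherence_category(adherence_pct):
    """Code DAA adherence (%) as in the LR score: <20%=5 ... >80%=1.

    Assignment of the exact boundary values (20, 40, 60, 80) is an assumption.
    """
    if adherence_pct < 20:
        return 5
    if adherence_pct < 40:
        return 4
    if adherence_pct < 60:
        return 3
    if adherence_pct <= 80:
        return 2
    return 1


def lr_score(cirrhosis, hcc, hba1c, adherence_pct):
    """LR score = 3*LC + 4*HCC + 1*HbA1c + 33*adherence category."""
    return (3 * int(cirrhosis) + 4 * int(hcc) + 1 * hba1c
            + 33 * adherence_category(adherence_pct))


def youden_cutoff(scores, failed):
    """Return (cutoff, J) maximizing Youden's J = sensitivity + specificity - 1.

    A case is called 'predicted failure' when its score is >= the cutoff.
    """
    n_pos = sum(1 for y in failed if y)
    n_neg = len(failed) - n_pos
    best_cutoff, best_j = None, -1.0
    for c in sorted(set(scores)):
        tp = sum(1 for s, y in zip(scores, failed) if y and s >= c)
        tn = sum(1 for s, y in zip(scores, failed) if not y and s < c)
        j = tp / n_pos + tn / n_neg - 1
        if j > best_j:
            best_cutoff, best_j = c, j
    return best_cutoff, best_j


CUTOFF = 40  # value reported in the text
# Hypothetical patients: (cirrhosis, HCC, HbA1c %, adherence %)
for patient in [(True, False, 6.0, 95), (False, False, 5.5, 95), (False, True, 6.5, 30)]:
    score = lr_score(*patient)
    print(patient, score, "predicted failure" if score >= CUTOFF else "predicted SVR")
```

Because the adherence term carries a coefficient of 33, even fully adherent patients start at 33 points, so the remaining terms decide whether a patient crosses the cutoff of 40.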

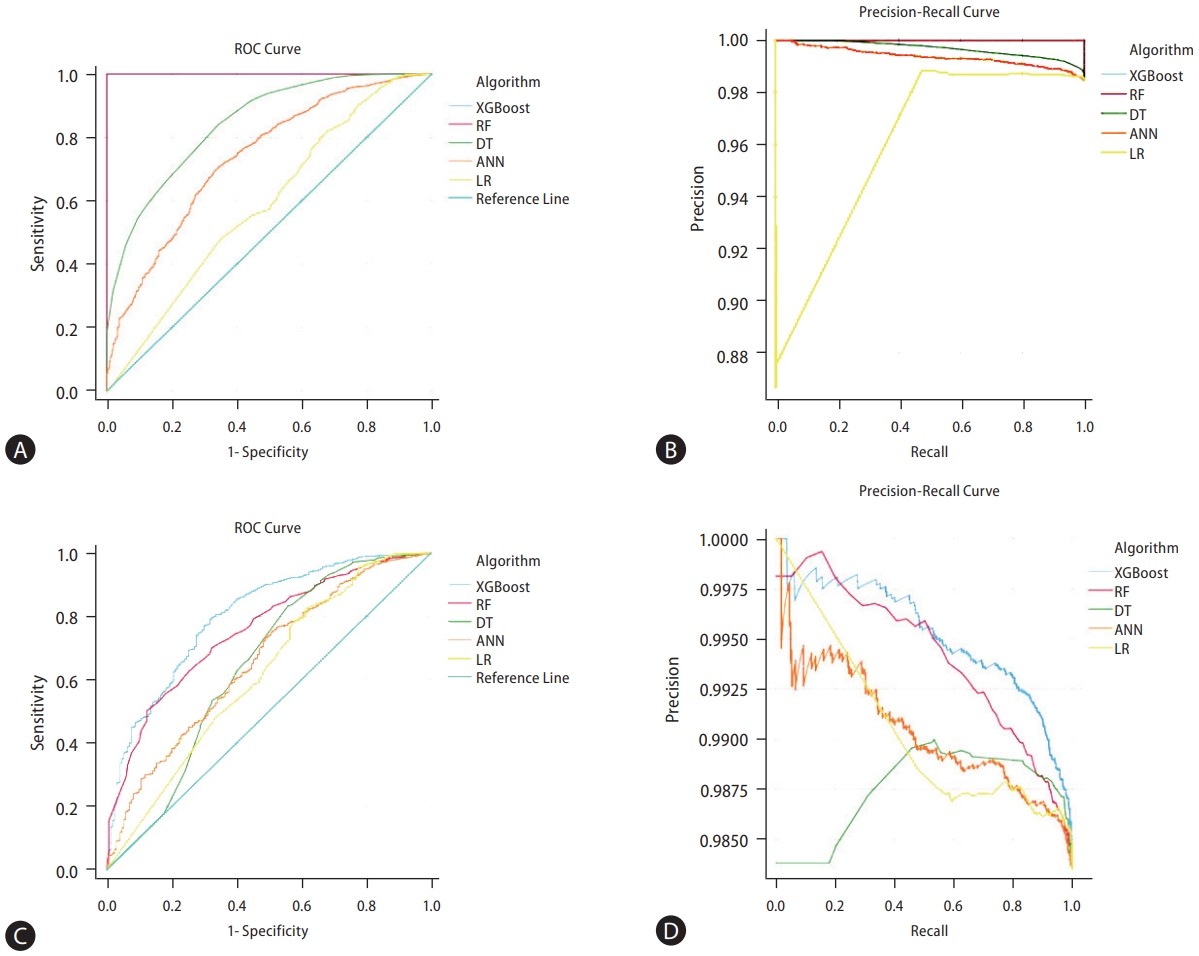

Performance of the predictive models

In the training dataset, the AUROCs were 1.000, 1.000, 0.845, 0.736, and 0.588 for the XGBoost, random forest, decision tree, artificial neural network, and logistic regression models, respectively. Both XGBoost and random forest achieved 100% accuracy, precision, and recall, with an F1-score of 1.00 (Fig. 1A, B, and Table 3).

Performance of the predictive models. Figure 1 shows the ROC curves and precision-recall curves of the eXtreme Gradient Boosting (XGBoost), random forest (RF), decision tree (DT), artificial neural network (ANN) algorithms, and logistic regression (LR) models in the training dataset (A, B) and validation dataset (C, D). A precision-recall curve closer to the upper-right corner indicates better performance.

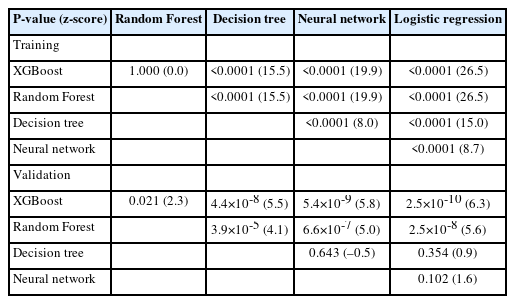

In the validation dataset, the AUROCs were 0.803, 0.756, 0.644, 0.658, and 0.616 for the XGBoost, random forest, decision tree, artificial neural network, and logistic regression models, respectively (Fig. 1C, D, and Table 3). The DeLong test showed that XGBoost performed significantly better than the random forest (P=0.021), decision tree (P=4.4×10⁻⁸), artificial neural network (P=5.4×10⁻⁹), and logistic regression model (P=2.5×10⁻¹⁰) (Table 4). The accuracy, precision, recall, and F1-score of XGBoost were 98.3%, 98.4%, 99.9%, and 0.992, respectively.
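For readers unfamiliar with the DeLong test, the sketch below implements one standard formulation for comparing two correlated AUROCs evaluated on the same patients. Each model's AUROC is decomposed into per-patient placement values, their covariance gives the variance of the AUROC difference, and a two-sided z-test yields the P-value. It is an illustrative pure-Python version with quadratic running time, not the code used in the study; the toy scores and labels are hypothetical.

```python
import math


def _auc_components(scores, labels):
    """AUC plus DeLong placement values (V10 for positives, V01 for negatives)."""
    pos = [s for s, y in zip(scores, labels) if y]
    neg = [s for s, y in zip(scores, labels) if not y]

    def psi(a, b):  # 1 if the positive outranks the negative, 0.5 for a tie
        return 1.0 if a > b else (0.5 if a == b else 0.0)

    v10 = [sum(psi(a, b) for b in neg) / len(neg) for a in pos]
    v01 = [sum(psi(a, b) for a in pos) / len(pos) for b in neg]
    return sum(v10) / len(v10), v10, v01


def _cov(u, v):
    """Sample covariance of two equally long sequences."""
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v)) / (len(u) - 1)


def delong_test(scores_a, scores_b, labels):
    """Two-sided DeLong test for two correlated AUROCs on the same cases."""
    auc_a, v10_a, v01_a = _auc_components(scores_a, labels)
    auc_b, v10_b, v01_b = _auc_components(scores_b, labels)
    m, n = len(v10_a), len(v01_a)   # numbers of positive and negative cases
    var = ((_cov(v10_a, v10_a) + _cov(v10_b, v10_b) - 2 * _cov(v10_a, v10_b)) / m
           + (_cov(v01_a, v01_a) + _cov(v01_b, v01_b) - 2 * _cov(v01_a, v01_b)) / n)
    z = (auc_a - auc_b) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))   # equals 2 * (1 - Phi(|z|))
    return auc_a, auc_b, z, p


# Hypothetical toy data: label 1 = treatment failure
labels = [1, 1, 1, 1, 0, 0, 0, 0]
model_a = [0.9, 0.8, 0.7, 0.3, 0.6, 0.2, 0.1, 0.4]
model_b = [0.5, 0.6, 0.2, 0.7, 0.4, 0.3, 0.8, 0.1]
print(delong_test(model_a, model_b, labels))
```

The covariance terms are what distinguish this from comparing two independent AUROCs: because both models score the same patients, their errors are correlated, and ignoring that correlation would misstate the variance of the difference.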

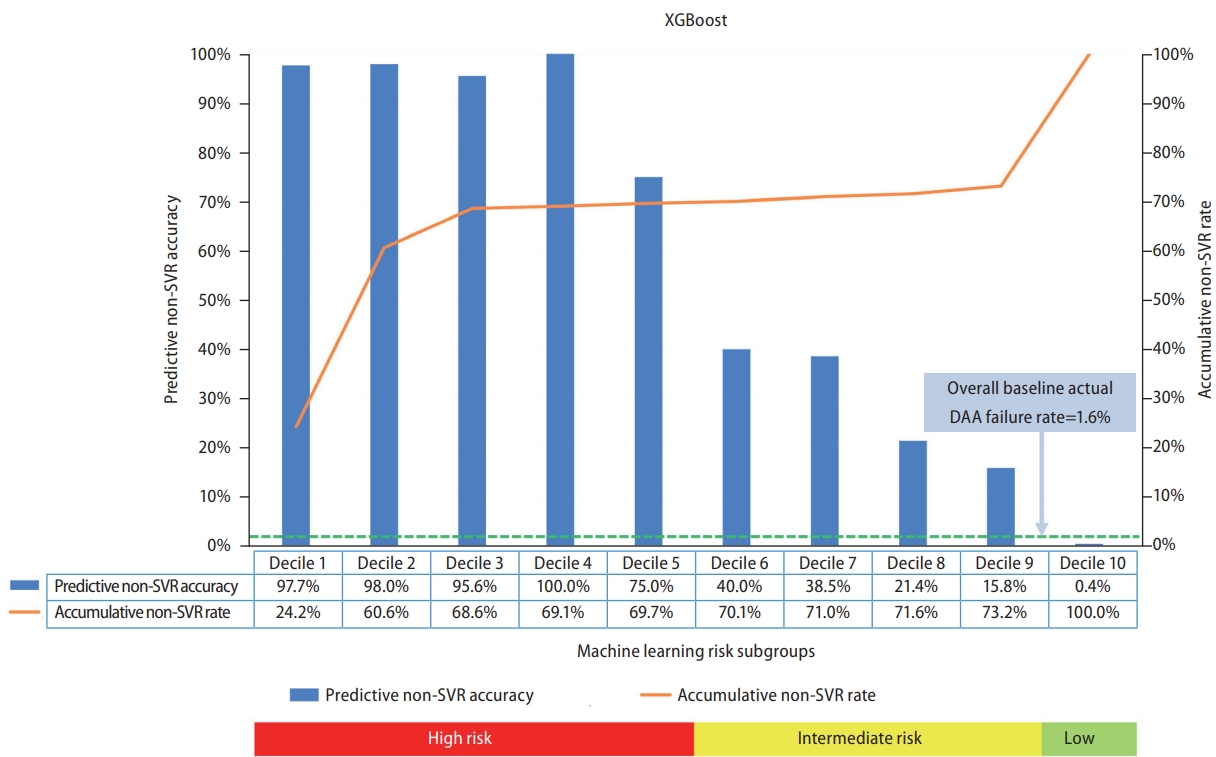

Risk stratification based on the XGBoost algorithm

All HCV patients receiving DAA treatment were further stratified according to the XGBoost prediction results. XGBoost provides a risk coefficient between 0 and 1 for each case; the higher the coefficient, the higher the chance of achieving SVR. Patients were divided into ten subgroups based on risk coefficient deciles. Figure 2 shows the predicted non-SVR accuracy for each subgroup using the XGBoost algorithm. Based on the risk coefficients, the participants were stratified into high-risk (deciles 1–5), intermediate-risk (deciles 6–9), and low-risk (decile 10) populations. The DAA failure rate was 75–100% in the high-risk, 15.8–40.0% in the intermediate-risk, and 0.4% in the low-risk population. The DAA failure rate in each of the top five deciles was substantially higher than the baseline rate (1.6%). The cumulative non-SVR rate in the high-risk population was 69.7%: among the 538 subjects in whom DAA treatment failed, 375 (69.7%) were identified by the XGBoost model within the top five deciles (Fig. 2). When the cutoff value of the risk coefficient was set at 0.5, the accuracy, sensitivity, specificity, positive predictive value, and negative predictive value were 99.5%, 69.7%, 99.9%, 97.4%, and 99.5%, respectively (Supplementary Table 2).

DAA treatment failure rate by decile risk subgroups assessed using the XGBoost model among all cases. All HCV patients were divided into ten subgroups using the deciles of risk coefficients obtained from the XGBoost model: patients with risk coefficients from 0 to 0.1 belong to decile 1, those from 0.1 to 0.2 to decile 2, and so on. The bars represent the predicted non-SVR accuracy in each subgroup. The red line represents the cumulative non-SVR rate. DAA, direct-acting antivirals; XGBoost, eXtreme Gradient Boosting; HCV, hepatitis C virus; SVR, sustained virological response.
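Because decile 1 is defined by risk coefficients from 0 to 0.1, decile 2 by 0.1 to 0.2, and so on, these subgroups are equal-width bins of the XGBoost output rather than quantile-based deciles. The hypothetical helper below sketches that grouping together with the cumulative share of failures captured, as traced by the red line in Figure 2. It assumes, as stated in the text, that the model output is the predicted chance of SVR, so lower bins are higher risk; the boundary handling (left-closed bins, with 1.0 placed in the top bin) is an assumption.

```python
def risk_bins(pred_svr_prob, failed, n_bins=10):
    """Group patients into equal-width bins of predicted SVR probability.

    Bin 1 holds [0, 0.1), bin 2 [0.1, 0.2), ..., bin 10 [0.9, 1.0].
    Returns one tuple per bin: (bin number, n, failures, failure rate,
    cumulative share of all failures captured from bin 1 up to this bin).
    """
    counts = [0] * n_bins
    fails = [0] * n_bins
    for p, y in zip(pred_svr_prob, failed):
        b = min(int(p * n_bins), n_bins - 1)   # p == 1.0 goes into the top bin
        counts[b] += 1
        fails[b] += int(y)
    total_fail = sum(fails)
    rows, captured = [], 0
    for b in range(n_bins):
        captured += fails[b]
        rate = fails[b] / counts[b] if counts[b] else float("nan")  # empty bin
        rows.append((b + 1, counts[b], fails[b], rate, captured / total_fail))
    return rows


# Hypothetical toy predictions and outcomes (True = DAA failure)
probs = [0.05, 0.15, 0.95, 0.97, 1.0, 0.55]
failed = [True, True, False, False, False, True]
for row in risk_bins(probs, failed):
    print(row)
```

Reading the cumulative column at bin 5 gives the kind of figure reported in the text (the share of all failures found in the five highest-risk bins).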

Importance of predictors

The relative importance of predictors of DAA failure was evaluated using the XGBoost algorithm in all cases. The x-axis represents the ratio of the number of times a variable is used to the total number of trees. The top 12 predictors were body mass index, viral load, α-fetoprotein, bilirubin, platelets, FIB-4 index, creatinine, ALT, albumin, age, prothrombin time, and AST level (Supplementary Fig. 1).
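The frequency-based importance described above can be sketched in a few lines. The tree representation below (each tree given as the list of features it splits on) is a hypothetical simplification of a fitted XGBoost model, and the feature names in the usage lines are placeholders.

```python
from collections import Counter


def split_frequency(trees):
    """Importance = (number of times a feature is used) / (number of trees).

    `trees` is a list in which each tree is represented, hypothetically, as
    the list of feature names used at its internal (split) nodes.
    """
    counts = Counter(feature for tree in trees for feature in tree)
    n_trees = len(trees)
    # highest frequency first; ties keep first-seen order
    return sorted(((f, c / n_trees) for f, c in counts.items()),
                  key=lambda item: item[1], reverse=True)


toy_trees = [["HCV_RNA", "BMI", "HCV_RNA"], ["BMI", "AFP"], ["HCV_RNA"]]
print(split_frequency(toy_trees))
```

Library importance tables may normalize these counts differently (for example, so that they sum to 1 across features); that rescales the values but leaves the ranking unchanged. Split frequency also says nothing about the direction of an effect, which is what the SHAP analysis adds.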

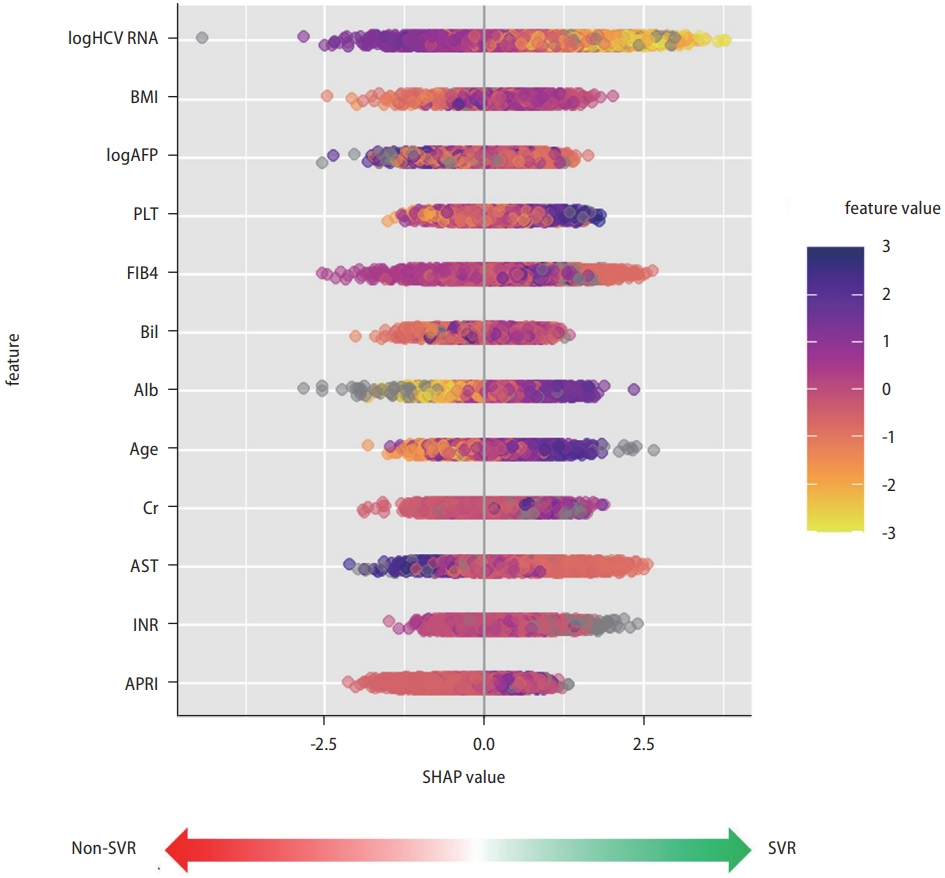

SHAP summary plot

Shapley Additive exPlanations (SHAP) was used to quantify the contribution of each feature to the model output [15]. Figure 3 summarizes the explainability of the XGBoost model with SHAP in all cases. SHAP>0 indicates a higher probability of SVR, whereas SHAP<0 indicates a higher chance of non-SVR. Each dot represents a sample, and the colors represent feature values ranging from low (yellow) to high (purple). The color distribution of the dots shows the effect of each feature on DAA efficacy. For example, the purple dots for HCV RNA are concentrated at SHAP<0, indicating that a higher viral load increases the probability of treatment failure, whereas the yellow dots for albumin are concentrated at SHAP<0, indicating that subjects with low albumin levels are prone to treatment failure. In brief, elevated viral load, α-fetoprotein, FIB-4 index, bilirubin, and AST levels increased the risk of DAA failure, and lower body mass index, platelet count, and albumin level, as well as younger age, were associated with a lower probability of SVR.

SHAP summary plot. The SHAP summary plot combines feature importance and feature effects on DAA efficacy in all cases. The x-axis represents the SHAP value of each feature. A SHAP value >0 represents a positive correlation with SVR, and a SHAP value <0 represents a negative correlation with SVR. Each point represents a sample, with overlapping points jittered vertically; the colors represent feature values ranging from low (yellow) to high (purple). SHAP, Shapley additive explanations; DAA, direct-acting antivirals; SVR, sustained virological response; BMI, body mass index; AFP, α-fetoprotein; PLT, platelets; FIB-4, fibrosis-4 index; AST, aspartate aminotransferase; INR, international normalized ratio; APRI, aminotransferase to platelet ratio index.

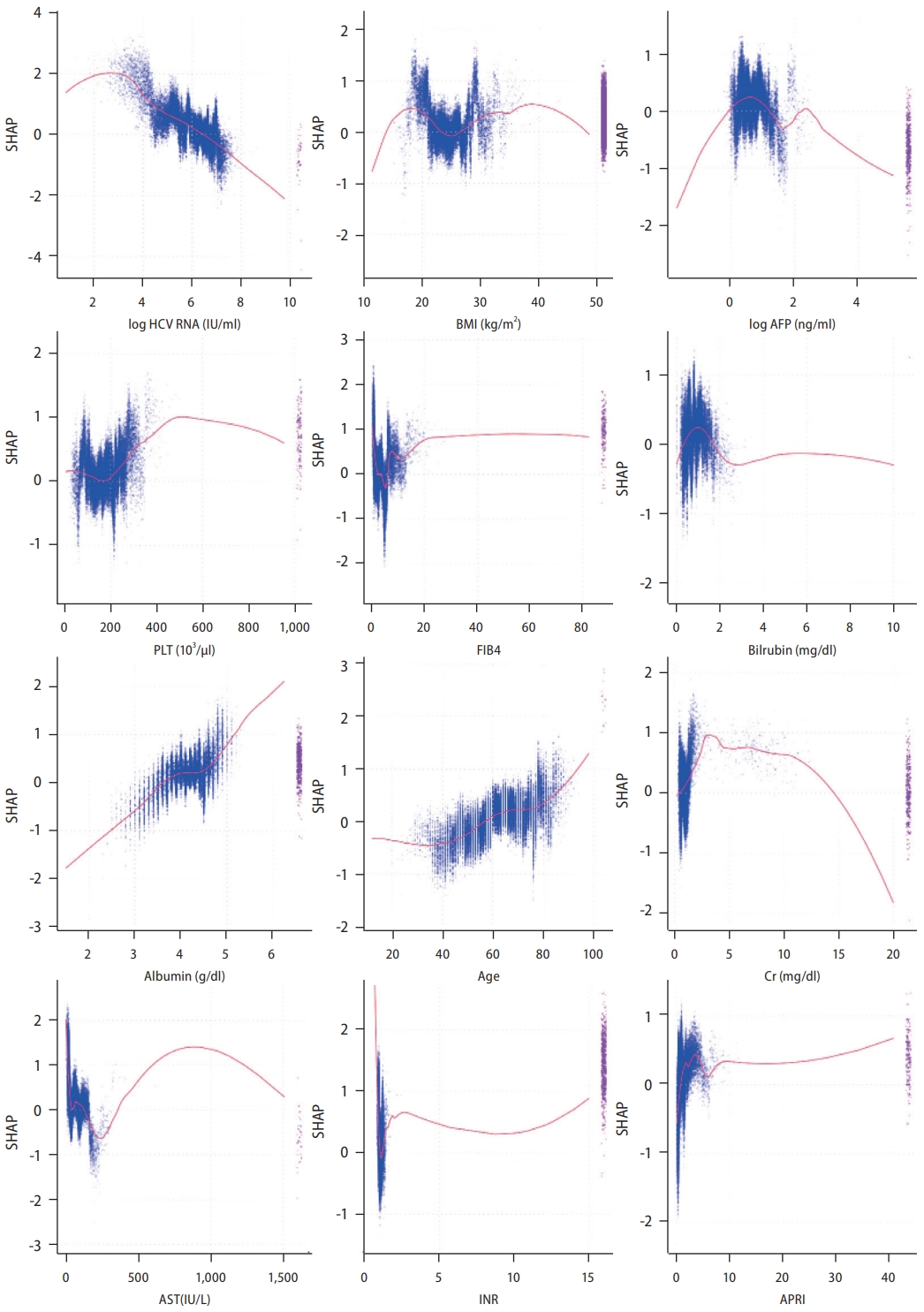

The detailed relationships between the features and SVR are shown in Figure 4, revealing nonlinear relationships between the predictors and DAA efficacy. For example, HCV RNA levels <10⁶ IU/mL increased the likelihood of SVR, whereas HCV RNA >2×10⁶ IU/mL increased the risk of virological failure. Roughly, subjects aged <60 years and those with serum bilirubin >2 mg/dL, albumin <3.5 g/dL, or creatinine >15 mg/dL were prone to treatment failure. When AST was between 200 and 300 IU/L, treatment failure was more likely; in contrast, AST <100 or >400 IU/L was associated with a higher chance of achieving SVR.

SHAP dependence plot. The SHAP dependence plots show how the model output (SHAP value) varies with the value of each feature. SHAP, Shapley additive explanations; HCV, hepatitis C virus; BMI, body mass index; AFP, α-fetoprotein; PLT, platelets; FIB-4, fibrosis-4 index; AST, aspartate aminotransferase; INR, international normalized ratio; APRI, aminotransferase to platelet ratio index.

DISCUSSION

The nationwide TACR study investigated risk factors for DAA treatment failure in Taiwanese patients. Multivariate regression analysis revealed that liver cirrhosis, HCC, poor DAA adherence, and high HbA1c levels were significantly associated with virological failure. We developed an ML-based predictive model to identify patients at risk of treatment failure. The XGBoost model outperformed the other algorithms and the conventional logistic regression model, with AUROCs of 1.000 and 0.803 in the training and validation datasets, respectively. The model identified 69.7% of the subjects who failed to achieve SVR within the top five decile subgroups, suggesting that an AI-based model can strengthen decision-making for antiviral therapy.

The AI model showed that subjects with features of liver cirrhosis prone to decompensation (i.e., higher FIB-4 index, bilirubin, and AST levels; lower albumin and platelet counts) were less likely to achieve SVR. Elevated AFP levels and decreased BMI (i.e., weight loss) are hallmarks of active HCC and predispose patients to treatment failure. In addition, HCV patients with a high baseline viral load had more difficulty clearing the virus than those with a low viral load. These AI findings are consistent with those of the multivariate regression analysis. DAA adherence and HbA1c were not among the top 12 predictors in the AI model, possibly owing to collinearity between these factors and other variables. Comorbid diabetes is well known to increase the risk of HCC in HCV-infected patients [16,17]. A substantial proportion of decompensated patients who do not achieve SVR may discontinue treatment early because of adverse events or death [18]. Body mass index has J- or U-shaped associations with overall and all-cancer mortality, and life expectancy is shorter in underweight than in healthy-weight individuals [19,20]. Liver cirrhosis and HCC were not ranked as significant predictors by the XGBoost algorithm, possibly because of collinearity between liver cirrhosis and the FIB-4 index and between HCC and AFP levels. In contrast, viral load was not an independent risk factor in the multivariate logistic analysis but became a significant predictor in the XGBoost approach, possibly because an appropriate HCV RNA cutoff value was not embedded in the regression model. Previous studies have shown that the SVR12 rate is significantly lower in patients with a high baseline viral load than in those with a low viral load; however, the optimal cutoff values for high vs. low viral load vary across studies [21,22].

Although pan-genotypic DAAs can be safely administered to traditionally difficult-to-treat HCV populations, managing patients with active HCC, decompensated liver cirrhosis, RASs, or prior DAA failure requires special attention [3]. Clinical practice generates substantial amounts of nonlinear data that are difficult to evaluate using conventional statistical methods. The SHAP dependence plot showed the relationship between SVR and the predictors (Fig. 4), allowing clinicians to recognize the ranges of key variables associated with SVR. The AI predictive model can help identify high-risk patients and alert clinicians to risk factors before DAA therapy is initiated.

The advantages of ML include flexibility and scalability, making it well suited to processing nonlinear big data [5]. The HCV-TARGET study in the United States and Europe (n=6,525) applied multiple algorithms (elastic net, neural network, random forest, and gradient boosting) to predict DAA treatment failure (C-index=0.64–0.69), outperforming the multivariate logistic regression model (C-index=0.51) [23]. In the HCV-TARGET study, the top ten predictors were albumin, liver enzymes, bilirubin, sex, HCV RNA, sodium, HCC, platelet count, and tobacco use. Both the TACR and HCV-TARGET studies highlighted the vital roles of HCC and cirrhosis-related risk factors in DAA treatment failure. The AI approaches corroborated the findings of traditional statistics and further improved predictive performance compared with conventional statistical methods.

A meta-analysis revealed that patients with active HCC had a significantly lower SVR rate (73.1%) than those with inactive HCC (92.6%) or without HCC (93.3%) [24]. The REAL-C study enrolled propensity score-matched HCV patients and confirmed that SVR rates were reduced in patients with active HCC but not in those with inactive HCC (85.5% vs. 93.7%; P=0.03) [25]. Patients with active HCC may experience more adverse effects and early DAA discontinuation [26], which partially explains the suboptimal DAA response in this population. The mechanisms underlying suboptimal antiviral efficacy in patients with active HCC remain unclear and may include ineffective delivery of DAAs to target sites through the blood supply [27] and impaired host immunity in HCC patients [28]. HCC patients with curative potential should be treated aggressively before DAA administration to ensure a greater chance of viral clearance. However, the optimal timing of DAA initiation in patients with incurable HCC remains controversial. Viral eradication significantly reduces mortality in patients with HCC receiving either curative or palliative HCC therapy [29], suggesting that antiviral therapy should not be withheld from patients who are not eligible for curative HCC treatment [30].

Previous studies have reported that the SVR rate is lower in HCV patients with decompensated cirrhosis than in those with compensated cirrhosis [9,31]. The probability of achieving SVR varies with hepatic reserve [32]. Patients with higher Model for End-Stage Liver Disease (MELD) scores (>20, Child-Turcotte-Pugh class C) had lower SVR rates, more adverse effects, and a lower likelihood of liver function improvement [33-35]. For patients with a MELD score ≥20, posttransplant HCV treatment is recommended unless the expected waitlist time exceeds six months. Patients with MELD scores <15 should be treated promptly. The grey zone of MELD scores 15–19 requires tailored, case-by-case therapy [11,36]. Our study provides information to identify patients at high risk of treatment failure. Selecting DAA regimens with high efficacy and good safety profiles and enhancing DAA adherence may help ensure treatment efficacy [9].

XGBoost is a supervised ML algorithm built on a gradient boosting framework. Ensemble methods combine multiple models to produce more accurate predictions. Gradient boosting is an ensemble technique in which new models are created to correct the errors of the existing ensemble and are added sequentially until no further improvement can be achieved [37]. It is called gradient boosting because it uses a gradient descent method to minimize the loss when adding new models [38]. Moreover, XGBoost supports both regression and classification tasks. In our study, XGBoost showed better predictive ability than the other algorithms.
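The sequential, error-correcting idea described above can be illustrated with plain gradient boosting for a binary outcome. This sketch fits one-split regression trees (stumps) to the negative gradient of the log-loss, y − p, and adds them with a learning rate; it runs a fixed number of rounds instead of stopping when improvement ceases, and it assumes both outcome classes are present. It is deliberately not XGBoost, which additionally uses second-order (Hessian) information, regularization, and deeper trees. All names, settings, and toy data are illustrative assumptions.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_stump(xs, residuals):
    """Fit a one-split regression tree (stump) to residuals by squared error."""
    best = None
    for j in range(len(xs[0])):
        for t in sorted({x[j] for x in xs}):
            left = [r for x, r in zip(xs, residuals) if x[j] <= t]
            right = [r for x, r in zip(xs, residuals) if x[j] > t]
            if not left or not right:
                continue
            lm, rm = sum(left) / len(left), sum(right) / len(right)
            sse = (sum((r - lm) ** 2 for r in left)
                   + sum((r - rm) ** 2 for r in right))
            if best is None or sse < best[0]:
                best = (sse, j, t, lm, rm)
    if best is None:                       # no valid split: contribute nothing
        return lambda x: 0.0
    _, j, t, lm, rm = best
    return lambda x: lm if x[j] <= t else rm


def gradient_boost(xs, ys, n_rounds=50, learning_rate=0.3):
    """Binary gradient boosting on the log-odds scale with stumps as base learners."""
    p0 = sum(ys) / len(ys)
    f0 = math.log(p0 / (1 - p0))           # start from the log-odds of the base rate
    stumps = []

    def logit(x):
        return f0 + learning_rate * sum(stump(x) for stump in stumps)

    for _ in range(n_rounds):
        # negative gradient of the log-loss with respect to the logit is y - p
        residuals = [y - sigmoid(logit(x)) for x, y in zip(xs, ys)]
        stumps.append(fit_stump(xs, residuals))  # new model corrects current errors
    return lambda x: sigmoid(logit(x))


def log_loss(model, xs, ys):
    eps = 1e-12
    return -sum(y * math.log(model(x) + eps) + (1 - y) * math.log(1 - model(x) + eps)
                for x, y in zip(xs, ys)) / len(ys)


xs = [[float(v)] for v in range(6)]
ys = [0, 0, 0, 1, 1, 1]
model = gradient_boost(xs, ys)
print(model([0.0]), model([5.0]), log_loss(model, xs, ys))
```

The learning rate shrinks each correction, which is one reason boosted ensembles need many rounds but tend to generalize better than a few large steps.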

ML models are often considered “black boxes” because their prediction process is too complex for humans to interpret. To overcome this problem, explainable AI methods based on Shapley values have been developed [39]. SHAP is derived from cooperative game theory and calculates the contribution of each feature to a prediction. SHAP provides insight into the inner workings of a “black box” by generating quantitative visualizations of the prediction process. SHAP reflects the influence of each feature in each sample and shows whether its impact on the outcome is positive or negative [15]. With SHAP, users can better understand how the model makes predictions. Moreover, it provides feedback on the key factors contributing to the outcome and allows potential biases to be identified. This transparency is crucial for convincing clinicians to rely on AI-based decision support systems [40]. “Explainable AI” may help bridge the gap between clinical medicine and AI predictive models.
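The game-theoretic definition can be made concrete with an exact Shapley computation for a small model. Each feature's value is its average marginal contribution over all coalitions S of the other features, weighted by |S|!(M−|S|−1)!/M!. This brute-force sketch treats features outside a coalition as fixed at a single baseline input, which is a simplifying assumption; dedicated tools such as the Python `shap` package's TreeExplainer compute attributions for tree ensembles efficiently rather than by enumeration. The toy model, feature names, and inputs are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values of model(x) relative to a single baseline input.

    Cost grows as 2^M in the number of features M, so this is for toy models only.
    """
    m = len(x)

    def value(coalition):
        # features in the coalition come from x, the rest from the baseline
        z = [x[k] if k in coalition else baseline[k] for k in range(m)]
        return model(z)

    phis = []
    for i in range(m):
        others = [k for k in range(m) if k != i]
        phi = 0.0
        for size in range(m):                       # |S| = 0 .. M-1
            weight = factorial(size) * factorial(m - size - 1) / factorial(m)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))  # marginal contribution
        phis.append(phi)
    return phis


# Hypothetical toy model with an interaction term (not the study's model)
def toy_model(z):
    feature_a, feature_b = z
    return 0.5 * feature_a - 0.8 * feature_b + 0.3 * feature_a * feature_b


x, baseline = [2.0, 1.0], [1.0, 1.5]
phis = shapley_values(toy_model, x, baseline)
print(phis, sum(phis), toy_model(x) - toy_model(baseline))
```

By construction the values sum to model(x) − model(baseline) (the efficiency property), which is what allows a SHAP summary plot to split each prediction into additive per-feature contributions, positive or negative.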

The current study has several limitations. Some patients (e.g., those with hepatic decompensation or HCC) may not survive long enough to obtain SVR12 data; this population may have relatively unfavorable prognostic factors, leading to a suboptimal treatment response. Although RASs have been confirmed to be associated with viral resistance [2,41], RAS testing is not recommended in routine clinical practice [42]. Because RAS data were available for only 7.0% of patients in the TACR database, this predictive model may underestimate the impact of RASs on the DAA response. Performance in the validation dataset was inferior to that in the training dataset. Potential overfitting and heterogeneity in the training dataset may affect model generalizability. However, measures to avoid overfitting may reduce the accuracy of ML models. In such an imbalanced dataset (non-SVR rate=1.6%), training accuracy is expected to exceed 98.4%, the accuracy obtained simply by predicting SVR for every patient. Under this premise, hyperparameter tuning of each AI model was relatively limited, making it difficult to avoid overfitting. As generalization is suboptimal, the present AI model should be further modified before being applied to other independent cohorts. The relatively small number of DAA failure events may limit the development of a robust model. Moreover, there is no universal approach to missing data imputation, a fundamental concern in real-world clinical datasets. The input data for the AI analysis in the current study included only clinical and virological data; combining them with genomic, proteomic, and metabolomic data may improve predictive accuracy in validation datasets in the future.
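The 98.4% floor is the accuracy of a trivial classifier that predicts SVR for every patient. Using the whole-cohort counts as an approximation (the training-set failure count is not reported separately):

$$\text{Accuracy}_{\text{all SVR}} = \frac{34{,}301 - 538}{34{,}301} = \frac{33{,}763}{34{,}301} \approx 0.984$$

Any useful model must exceed this baseline, which is why accuracy alone is a weak criterion in this setting.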

In conclusion, this nationwide TACR study applied ML algorithms for risk stratification in DAA failure. The performance of the AI model is superior to that of the conventional logistic regression model. The XGBoost model showed that subjects with features such as higher HCV RNA levels, active HCC, or decompensated liver cirrhosis were less likely to achieve SVR. This model captured 69.7% of patients who failed to achieve SVR among the top five decile subgroups. ML algorithms facilitate risk stratification in DAA failure and provide additional information on factors associated with DAA failure.

Notes

Authors’ contribution

M.Y.L analyzed the data and wrote the manuscript. C.F.H, C.H.H, C.M.T, L.R.M, H.T.K, K.C.T, C.C.L, M.J.B, S.J.W, J.F.H, M.L.Y, C.T.C, M.C.T, C.W.H, P.L.L, T.H.Y, Y.H.H, L.W.C, C.L.C, C.C.Y, S.S.Y, P.N.C, T.Y.H, J.T.H, W.C.W, C.Y.C, G.Y.C, G.X.Z, W.L.T, C.N.K, C.L.L, C.C.W, T.Y.L, C.L.L, W.W.S, T.H.L, T.S.C, C.J.L, C.Y.D, J.H.K, H.C.L, W.L.C, C.Y.P, and C.Y.C collected the clinical data. C.W.T confirmed the machine learning analysis. M.L.Y designed the study, interpreted data, and supervised the manuscript. All authors have approved the final version of the manuscript.

Conflicts of Interest

Ming-Lung Yu disclosed the following: research grant from Abbvie, Gilead, Merck, and Roche diagnostics; consultant for Abbvie, BMS, Gilead, Roche, and Roche diagnostics; and speaker for Abbvie, BMS, Eisai, Gilead, Roche, and Roche diagnostics.

Acknowledgements

This work was partially supported by the “Center For Intelligent Drug Systems and Smart Bio-devices (IDS2B)” and the “Center of Excellence for Metabolic Associated Fatty Liver Disease, National Sun Yat-sen University, Kaohsiung” from The Featured Areas Research Center Program within the framework of the Higher Education Sprout Project by the Ministry of Education (MOE) in Taiwan, and by grants MOST 111-2314-B-037-069-MY2, MOHW111-TDU-B-221-114007, KMUH-DK(B)111002-1, KMHK-DK(C)111004, KMHKDK(C)111006, KMU-TC111B04, KMU-TC111A04, NSTC 112-2321-B-001-006, and MOHW112-TDU-B-221-124007.

Abbreviations

AFP

α-fetoprotein

AI

artificial intelligence

ALT

alanine aminotransferase

ANN

artificial neural network

AST

aspartate aminotransferase

AUROC

area under the receiver operating characteristic curve

BMI

body mass index

DAA

direct-acting antivirals

DT

decision tree

FIB-4

fibrosis-4 index

HbA1c

hemoglobin A1c

HBV

hepatitis B virus

HCC

hepatocellular carcinoma

HCV

hepatitis C virus

INR

international normalized ratio

KNN

k-nearest neighbor

LC

liver cirrhosis

ML

machine learning

RAS

resistance-associated substitutions

RF

random forest

SHAP

Shapley additive explanations

SVR

sustained virological response

TACR

Taiwan HCV Registry Program

XGBoost

eXtreme Gradient Boosting

SUPPLEMENTAL MATERIAL

Supplementary material is available at Clinical and Molecular Hepatology website (http://www.e-cmh.org).

The input features in the machine learning model

Performance of XGBoost for predicting DAA treatment response in the overall cases

Importance of predictors. “Frequency” represents the ratio of the number of times a variable is used to the number of trees. BMI, body mass index; HCV, hepatitis C virus; AFP, α-fetoprotein; PLT, platelets; FIB-4, fibrosis-4 index; ALT, alanine aminotransferase; INR, international normalized ratio; AST, aspartate aminotransferase; APRI, aminotransferase to platelet ratio index; LDL, low-density lipoprotein; DAA, direct-acting antivirals; HbA1c, hemoglobin A1c; TG, triglyceride; rGT, gamma-glutamyltransferase; HDL, high-density lipoprotein; RVR, rapid virologic response; HBsAg, hepatitis B surface antigen; HIV, human immunodeficiency virus; HTN, hypertension; CKD, chronic kidney disease; RBV, ribavirin; HCC, hepatocellular carcinoma; LC, liver cirrhosis; HLD, hyperlipidemia; DM, diabetes; SAE, serious adverse event; RAS, resistance-associated substitutions; PWID, persons who inject drugs; CAD, coronary artery disease; DLC, decompensated liver cirrhosis; CLD, chronic liver disease; CVA, cerebrovascular accident.

Machine learning code

References


Study Highlights

• Approximately 1–3% of HCV patients experience DAA therapy failure. We conducted a nationwide study using AI to investigate the risk factors for DAA failure. The AI models outperformed the conventional logistic regression models. The AI model showed that subjects with features such as high HCV RNA levels, active hepatocellular carcinoma, or decompensated liver cirrhosis were prone to virological failure. Machine learning algorithms facilitate risk stratification in DAA failure and provide additional information on factors associated with DAA failure.